What it's like to have an enterprise server at home

HP DL380p Gen8 12 x LFF server, 2 x E5-2630, 48GB ECC RAM, 2 x 750W Power Supplies

A couple of months ago my NAS died: an old Thecus 2-bay unit running two disks in RAID1. Being lazy, I relied on the RAID array to protect me from drive failures and didn't have a backup. Big mistake! I had all the family's digital memories there: photos, videos, and backups of my machines. I did manage to recover some of them, but I swore to never let it happen again!

So there I was, searching for a replacement, thinking that this time I'd go the Synology way, back up everything to an external USB drive and do everything by the book. Then the HP DL380p happened. It's a Gen8 but hey, 160€ for the unit with no drives, my first enterprise server!

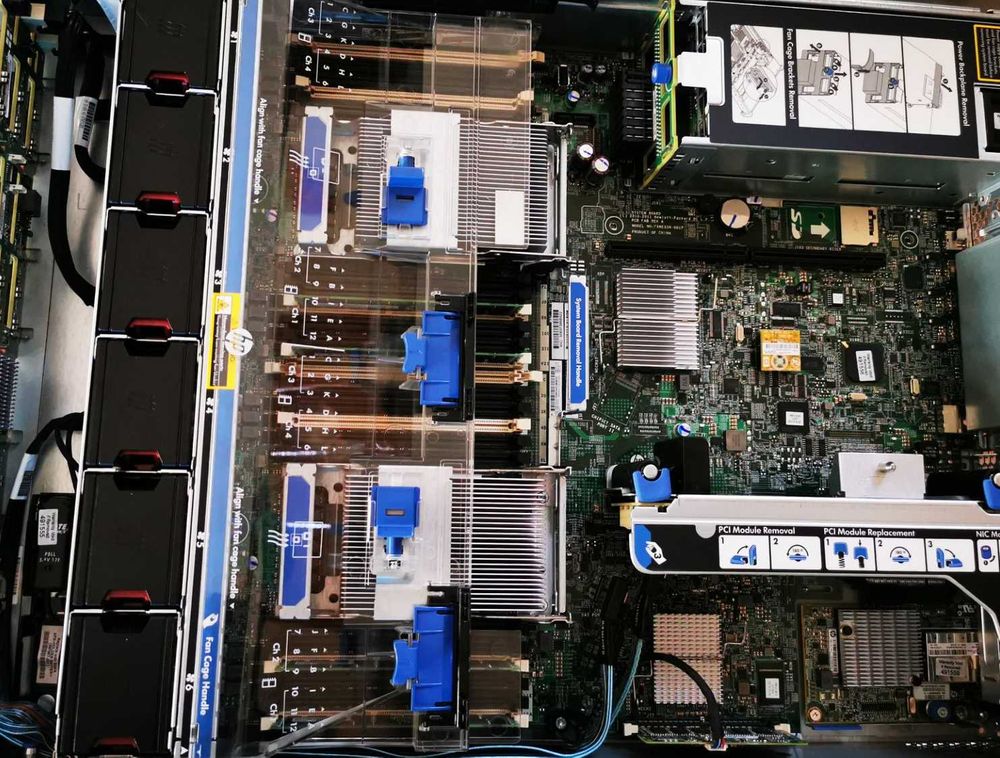

Not a single speck of dust! Running in a data center surely makes a difference. It came with only 16GB of RAM, but eBay was like "hey, the ECC DIMMs are cheap, wanna spend some cash on 32GB?" I'm not strong enough to resist, so I have 48GB of RAM now, because ZFS needs it, and so do I.

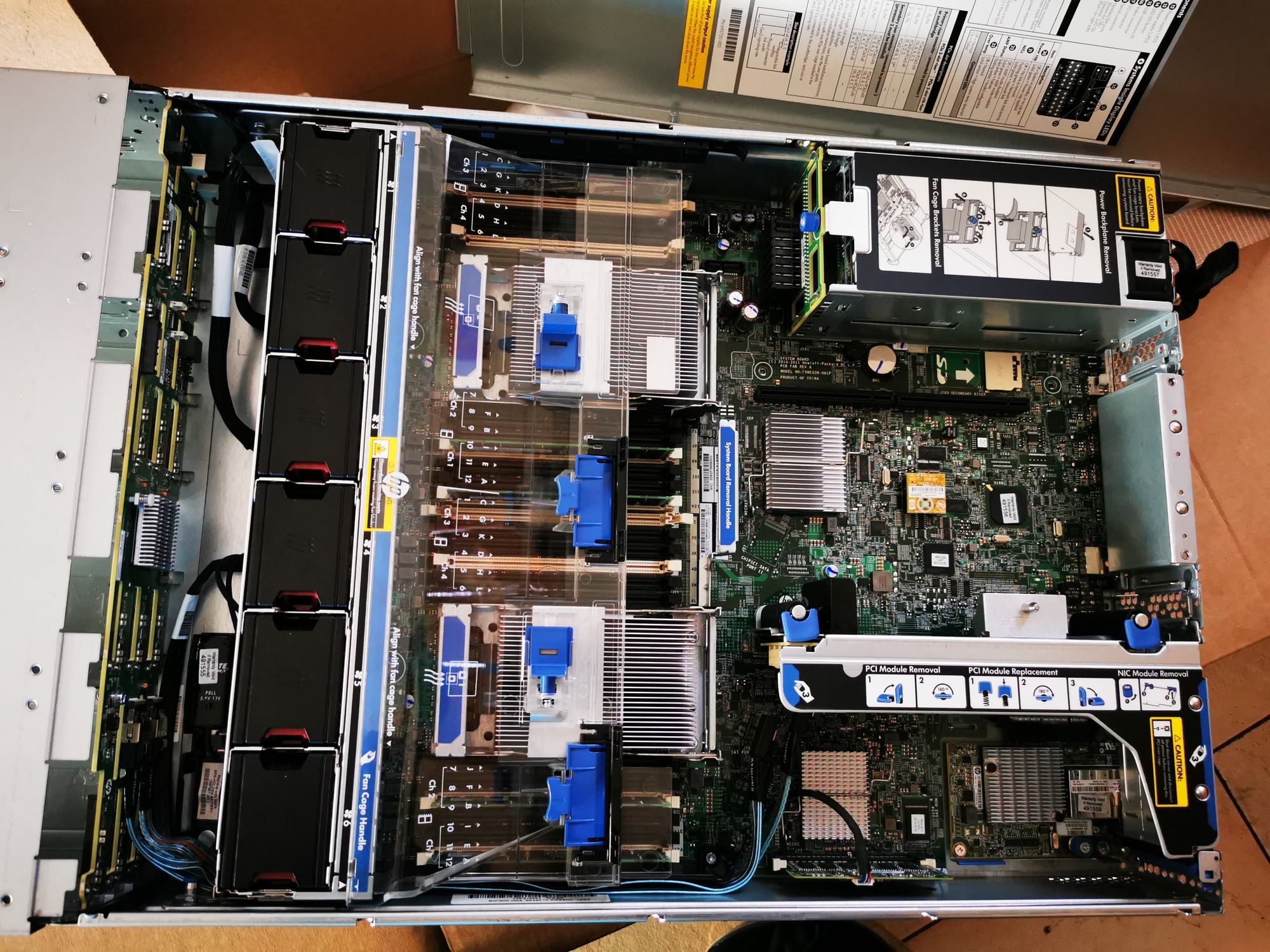

There was another problem in the front: it was empty. But I learned my lesson. eBay, rinse, repeat: drives and caddies this time, to finally make it look like the picture below.

There are 12 trays with nice LEDs now. One holds an SSD for the OS, and the other 11 are filled with 2TB SAS drives (20€/disk, wonderful). Hardware part done!

As for the OS, I chose FreeNAS because it offered ZFS.

ZFS is unaffected by RAID hardware changes which affect many other systems. On many systems, if self-contained RAID hardware such as a RAID card fails, or the data is moved to another RAID system, the file system will lack information that was on the original RAID hardware, which is needed to manage data on the RAID array. This can lead to a total loss of data unless near-identical hardware can be acquired and used as a "stepping stone". Since ZFS manages RAID itself, a ZFS pool can be migrated to other hardware, or the operating system can be reinstalled, and the RAIDZ structures and data will be recognized and immediately accessible by ZFS again.

After I burned my fingers with the RAID failure, I wanted something like this. And once I had settled on ZFS and was asked for the type of RAID, I went ahead with RAIDZ2, because at this point all I care about is the safety of the data.

The redundancy of RAIDZ2 beats using mirrors. If during a rebuild the surviving member of a mirror fails (the one disk in the pool that is taxed the most during the rebuild), you lose your pool. With RAIDZ2 any second drive can fail and you are still OK.
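To make that argument a bit more concrete, here is a small brute-force sketch in Python. It is not anything ZFS itself does; it just counts which combinations of failed disks a layout survives. The 10-disk layouts (five 2-way mirrors versus one RAIDZ2 vdev) and the function name `surviving_fraction` are my own illustrative assumptions.

```python
from itertools import combinations


def surviving_fraction(vdevs, failures):
    """Fraction of failure combinations that a pool survives.

    vdevs: list of (disk_count, tolerated_failures) tuples, one per vdev.
    A pool is lost as soon as any single vdev exceeds its tolerance.
    """
    # Map every disk in the pool to the index of the vdev it belongs to.
    disk_to_vdev = [i for i, (count, _) in enumerate(vdevs) for _ in range(count)]
    total = survived = 0
    for failed in combinations(range(len(disk_to_vdev)), failures):
        total += 1
        per_vdev = [0] * len(vdevs)
        for disk in failed:
            per_vdev[disk_to_vdev[disk]] += 1
        if all(n <= vdevs[i][1] for i, n in enumerate(per_vdev)):
            survived += 1
    return survived / total


# Hypothetical 10-disk layouts, chosen only for illustration.
mirrors = [(2, 1)] * 5   # five 2-way mirrors, each tolerates one failure
raidz2 = [(10, 2)]       # one RAIDZ2 vdev, tolerates two failures

print(round(surviving_fraction(mirrors, 2), 3))  # 0.889
print(surviving_fraction(raidz2, 2))             # 1.0
```

With two simultaneous failures the mirror layout dies in 5 of the 45 possible pairs (both halves of the same mirror), while RAIDZ2 survives all of them. This only counts combinations; it says nothing about rebuild times or how likely a failure is.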

So I ended up with a pool that has two disks' worth of redundancy, and kept one drive as a cold spare, just in case.
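For a rough idea of what two disks of parity cost in capacity, here is a back-of-the-envelope sketch. It assumes a single RAIDZ2 vdev and ignores ZFS metadata, padding and free-space overhead, so real numbers will be lower. The helper `raidz_usable_tb` is hypothetical, and both disk counts shown are assumptions about how the drives could be split between pool and spare.

```python
def raidz_usable_tb(disks: int, disk_tb: float, parity: int = 2) -> float:
    """Approximate usable capacity of one RAIDZ vdev, ignoring ZFS overhead."""
    if disks <= parity:
        raise ValueError("a RAIDZ vdev needs more disks than parity devices")
    return (disks - parity) * disk_tb


# Assumption: all 11 x 2TB drives sit in one RAIDZ2 vdev.
print(raidz_usable_tb(11, 2.0))  # 18.0
# Assumption: 10 drives in the vdev, one kept aside as the spare.
print(raidz_usable_tb(10, 2.0))  # 16.0
```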

I will not talk about performance here, since it is not something I'm chasing at the moment. With the server linked to a 1Gb network, I'm OK with what it offers in terms of throughput. I had to purchase an adapter to get an RJ45 port for my network cable; it's this one from 10Gtek: 1G SFP RJ45 Mini-Gbic Module, 1000Base-T SFP Copper Transceiver. The server has two HP Ethernet 10Gb 530 FLR-SFP+ adapters for when I'm ready for 10Gb.

Noise is not an issue, since it's down in the basement and it was winter so it didn't have to cool itself that much. But I can tell you that it screams when booting.

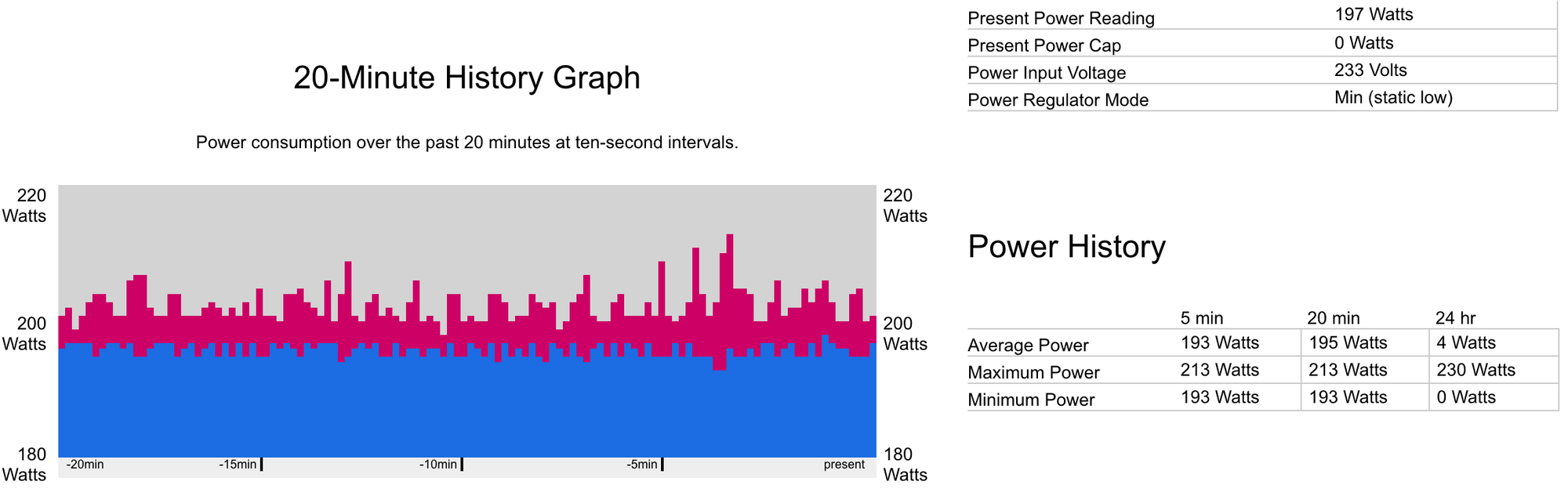

The only thing that scares me is the power consumption. I should have seen it coming since it's a Gen8 server, but nonetheless, 200-230W is a bit on the pricey side of things, coming in at around 20€/month. But hey, it's a server after all!
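The arithmetic behind that monthly figure can be sketched like this. The tariff of 0.13 €/kWh is an assumption, picked only because it lands close to the ~20€/month above; plug in your own rate. The function name `monthly_cost_eur` is hypothetical too, and the server is assumed to run 24/7 over a 30-day month.

```python
def monthly_cost_eur(watts: float, eur_per_kwh: float,
                     hours_per_day: float = 24.0, days: int = 30) -> float:
    """Electricity cost for a constant load over one month."""
    kwh = watts / 1000 * hours_per_day * days
    return kwh * eur_per_kwh


ASSUMED_TARIFF = 0.13  # EUR per kWh, an assumption and not a measured rate

for watts in (200, 230):
    print(watts, "W ->", round(monthly_cost_eur(watts, ASSUMED_TARIFF), 2), "EUR/month")
# 200 W -> 18.72 EUR/month
# 230 W -> 21.53 EUR/month
```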

Hardware specs:

2x Intel® Xeon® Processor E5-2630 (15M Cache, 2.30 GHz, 7.20 GT/s Intel® QPI)

48 GB of ECC RAM (4 x 8GB and 4 x 4GB)

2x HP 750W Common Slot Platinum Plus Hot-Plug Power Supplies

2x HP Ethernet 10Gb 2-port 530 FLR-SFP+

11x Hitachi 2TB SAS drives @ 7200RPM

1x TCSUNBOW 120GB SSD